National Science Foundation TeraGrid Workshop on Cyber-GIS:

http://www.cigi.uiuc.edu/cybergis/index.php

February 2-3, 2010 - Washington, DC

The NSF Cyber-GIS workshop will take place in conjunction with the 2010 UCGIS Winter Meeting at Doubletree Hotel, Washington, DC. The workshop will focus on the following themes and topics:

Complex geospatial systems and simulation of geographic dynamics

Computational intensity of spatial analysis and modeling

Data-intensive geospatial computation and visualization

High-performance, distributed, and/or collaborative GIS

Geospatial ontology and semantic web

Geospatial middleware, Clouds, and Grids

Open source GIS

Participatory spatial decision support systems

Science drivers for, and applications of Cyber-GIS

Spatial data infrastructure

For more information, please contact workshop co-chairs:

Shaowen Wang

National Center for Supercomputing Applications

University of Illinois at Urbana-Champaign

shaowen@illinois.edu

Nancy Wilkins-Diehr

San Diego Supercomputer Center

University of California at San Diego

wilkinsn@sdsc.edu

Thursday, December 24, 2009

Monday, December 21, 2009

HealthGrid 2010 conference: call for papers, posters and workshops - deadline Feb 15, 2010

conference web site: http://paris2010.healthgrid.org/

KEY DATES

Call for papers, posters and workshops closes:

February 15th 2010

The eighth HealthGrid conference will take place June 28-30, 2010, at University Paris XI in Orsay (France). Every year, this conference is an opportunity to discuss the state of the art in integrating grid practices into biology, medicine and health. This year, it will take place just as the European Grid Initiative starts federating the national grid initiatives and offering its resources to the Research Infrastructures. The conference program will include a number of high-profile keynote presentations complemented by a set of refereed papers, which will be selected through the present call. Of the selected papers, the best will be invited for oral presentation and the others for poster presentation. All selected papers will be published in the book series "Studies in Health Technology and Informatics", published by IOS Press and referenced in the Medline, Scopus, EMCare and Cinahl databases.

Call for papers and posters

Contributions should be full research papers (up to 5,000 words and a maximum of 10 pages). Selection for oral or poster presentation will be based on the content of the submitted papers, the originality of their contribution, technical quality, style and clarity of presentation, and importance to the field. Oral presentations may include demonstrations.

All papers must be submitted electronically. Please refer to the conference website for upload instructions. The guidelines for authors and support tools are those of the series "Studies in Health Technology and Informatics" (see URLs below). Papers are invited in, but not limited to, the following areas and topics:

A: ACCESSIBILITY

Challenges to making grids more accessible to bio-medical users

Scientific gateways

Workflow engines

Grid portals

Grid platforms

B: CORE TECHNOLOGIES AND KNOWLEDGE INTEGRATION

Grid technology versus web applications

Data privacy: confidentiality in distributed medical information systems – and the security challenges

Knowledge integration – knowledge management

Semantic techniques and the challenge of integrating heterogeneous biomedical data

Visualization in Grids

C: APPLICATIONS

Bioinformatics

Biomedical informatics

Medical imaging

Public health informatics

Genetics and epidemiological studies

Pharmaceutical R&D: drug discovery, clinical tests

Grid computing and the Virtual Physiological Human (VPH)

D: SOCIO ECONOMIC ASPECTS

Grid business aspects: sustainability and go-to-market strategies

Experiences in production

Grids used in real business

Grid sociology: how to win society for Grids?

E: THE FUTURE OF GRIDS

Experiences with GP-GPU

Cloud computing, on-demand computing

Nanomedicine

Call for workshops

This year, the conference Program Committee is also calling for workshops. On Monday afternoon, June 28th 2010, parallel workshops are scheduled from 2:30 pm to 6:00 pm. Workshop proposals should include the following information:

Topic: the workshop topic should be directly related to the conference topics listed in this call.

Duration: all workshops selected through this call will take place Monday afternoon. Duration can be 90 minutes or 210 minutes (including coffee break).

Target audience: what is the expected attendance (for room allocation)?

Format: do you wish to invite the speakers or to call for contributions? The contributions to the workshops will not be included in the conference proceedings.

Name, affiliation and email address of the workshop submitter.

Tuesday, November 24, 2009

Code-A-Thon

I attended the NHIN CONNECT Code-A-Thon in Portland, Oregon, last week. It was two days of developers planning and working on the next release of the NHIN CONNECT software (v2.3). There's a wiki with notes from the two days' worth of sessions.

But the most interesting and relevant piece was when they started talking about future architecture. Two of the future topics (out of probably 20 or so) were grid computing and cloud computing. Because NHIN is distributed by nature (CONNECT nodes everywhere), we had a short discussion about how data and computing power can be spread around. Specifically, Hadoop and MapReduce came up as ways to spread jobs out over NHIN and NHIN-compatible systems.
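The idea discussed here, pushing a job out to where the data lives and combining only the results, is essentially the map-reduce pattern. The sketch below is plain Java, not Hadoop's API, and the node data and category names are hypothetical: each node counts its own records locally (the map/combine step), and a central step merges the partial counts (the reduce step), so raw records never have to leave the nodes.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;
import java.util.Map;
import java.util.TreeMap;

/** Plain-Java illustration of map-reduce across nodes (not Hadoop; names are hypothetical). */
class NodeMapReduce {

    /** Map/combine step, run locally on one node: count that node's records per category. */
    static Map<String, Long> mapOnNode(List<String> records) {
        Map<String, Long> partial = new TreeMap<>();
        for (String category : records) {
            partial.merge(category, 1L, Long::sum);
        }
        return partial;
    }

    /** Reduce step, run centrally: merge the partial counts returned by every node. */
    static Map<String, Long> reduce(List<Map<String, Long>> partials) {
        Map<String, Long> total = new TreeMap<>();
        for (Map<String, Long> partial : partials) {
            partial.forEach((category, count) -> total.merge(category, count, Long::sum));
        }
        return total;
    }

    public static void main(String[] args) {
        // Hypothetical records held at two separate nodes.
        List<List<String>> nodes = Arrays.asList(
                Arrays.asList("flu", "gi", "flu"),
                Arrays.asList("gi", "rash"));
        List<Map<String, Long>> partials = new ArrayList<>();
        for (List<String> records : nodes) {
            partials.add(mapOnNode(records)); // in a real deployment this would run on each node
        }
        System.out.println(reduce(partials)); // {flu=2, gi=2, rash=1}
    }
}
```

Only the small per-node maps cross the network, which is the property that makes the pattern interesting for a federated system like NHIN.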

On the whole, a pretty remarkable mini-con. This is a new approach for federal open source projects, and it's refreshing to see how far OSS has come.

Labels:

grid computing,

NHIN,

nhin connect,

open source

Wednesday, November 4, 2009

Vietnam welcomes three new grid sites; hospitals get new ‘HOPE’

... HOPE (HOspital Platform for E-health), developed jointly at CNRS and HealthGrid in France, allows hospital sites to exchange medical information. HOPE is now installed at the Institute of Information Technology in Ho Chi Minh City, formerly Saigon, for testing. All going well, it will be installed in the primary Ho Chi Minh hospital... Read more:

http://www.isgtw.org/?pid=1002120

Tuesday, October 27, 2009

Friday, October 23, 2009

PHGrid Community Update

To the PHGrid Community:

Due to significant organizational change currently underway, the internal team supporting the PHGrid activities within NCPHI has transitioned to other projects. To assist in this transition, we have made many updates to the PHGrid documentation (technical and project) posted on the PHGrid wiki (http://wiki.phgrid.net) and to the PHGrid-related software in the Google Code repository. If anyone has questions about this change, or about PHGrid software and services, please don't hesitate to contact me. We look forward to continuing PHGrid research activities once the reorganization is complete. It has been my sincere pleasure to work with the NCPHI PHGrid team (Brian, John, Peter, Dan, Chris, Moses, Joseph).

-- Tom

Monday, October 19, 2009

So long and thanks for all the fish...

Thank you to everyone I've worked with over the years as part of the public health informatics research grid project. I've met some extremely bright individuals and had a chance to collaborate with some extremely rare organizations and groups.

Although I'm moving off the project formally (i.e., I won't get paid for contributing), I'll still be participating through the loose system of collaboration that the project uses to create the blog, wiki and software elements.

Saturday, October 17, 2009

Grid Computing Technologies for Geospatial Apps

Grid Computing Technologies for Geospatial Applications:

http://ifgi.uni-muenster.de/0/agile/

The gallery is here: http://ifgi.uni-muenster.de/0/agile/gallery.html

Standing room only!! Or maybe just grab an open seat...

Friday, October 16, 2009

Project statistics

While creating the transition documentation, I ran some stats on the active code base (not including everything in old-projects) using cloc.

I'm not really a fan of measuring quality by number of lines of code (good programmers produce fewer lines of code than bad but busy programmers), and a lot of this is boilerplate. But I think it's worth noting that with just a limited team, we wrote 282 Java files with about 25k lines of Java, 2k lines of JSP, and 6k lines of comments and documentation. Nothing massive, but it's a decent body of work.

| Language | Files | Blank lines | Comment lines | Code lines |
|---|---|---|---|---|
| HTML | 579 | 19160 | 9724 | 161640 |
| Java | 282 | 5420 | 5594 | 25656 |
| Javascript | 35 | 3098 | 2779 | 14490 |
| XML | 136 | 956 | 998 | 9448 |
| XSD | 20 | 146 | 99 | 4228 |
| SQL | 126 | 425 | 769 | 3840 |
| JSP | 20 | 357 | 95 | 2368 |
| CSS | 12 | 158 | 140 | 1460 |
| Bourne Shell | 1 | 2 | 0 | 7 |
| SUM | 1211 | 29722 | 20198 | 223137 |

Wednesday, October 14, 2009

Enhanced PHGrid portal wireframe

So, as a step up from a JPG, the PHGrid portal demo is now web-based. Kudos to Chris.

Click HERE to launch...

Code transfer

I finished moving all of the active source projects from SourceForge to Google Code to support our transition off the project.

The following subprojects have been successfully moved (GIPSEPoisonService, GIPSEService, GIPSEServiceInstaller, gmap-polygon and gridviewer were all moved previously):

- GridMedlee: from sf to gc.

- PHGridLanding: from sf to gc.

- SecureSimpleTransfer: from sf to gc.

- gipse-dbimporter: from sf to gc.

- gipse-store: from sf to gc.

- gipse-poly-web: from sf to gc.

- loader-gmaps-poly: from sf to gc. (this includes the CSVs for setting up the gridviewer GIS tables)

- npds-gmaps: from sf to gc.

- npds-gmaps-web: from sf to gc.

- poicondai: from sf to gc.

- schemas: from sf to gc. (this includes the schemas and example xml for the GIPSE services)

The sf projects will be left intact so as not to break any links, but all activity will happen on the Google Code side from today onward.

Labels:

googlecode,

sourceforge,

subversion,

transition

Successful GIPSEService test

Forgot to post that last week Ron Price and I successfully tested a deployment of the GIPSEService at the Denver DOH.

Ron, working with Art Davidson, set up a synthetic aggregate data set and then deployed an instance of the GIPSEService (8/31 GIPSE spec from the SVN repository).

Using lab credentials, I was then able to submit a test query from the NCPHI Lab; the GIPSEService processed it successfully and sent back a response document containing the relevant observation set. I also tested with inappropriate credentials from unauthorized locations and was not able to access the service (as expected, since Ron's security controls prevent access by unauthorized users or locations).

Friday, October 9, 2009

Thursday, October 8, 2009

GAARDS Security implementation.

So, my next task for the coming months is to learn, tinker with, and hopefully implement some cool bits of GAARDS, as made by the folks up at Ohio State through their work with caBIG and caGrid. After a few preliminary readings of white papers and discussions with other people who have investigated various security models, I'm going to try to summarize things as I understand them, and invite people to correct my summaries...

Globus works with X.509 certificates. To save a lot of complicated two-stepping, I'd say the easiest way to think of a certificate is as a license with a special key embedded in it. Two nodes wanting to talk to each other have to present their certificates in order to access services and establish secure communication, and the nodes have to "trust" each other's certificates.

The way to get "automatic" trust without having to add keys to individual trust stores would be to have all the certificates issued by a trusted third party like Verisign or Thawte. This is like showing a passport or a driver's license as ID instead of a business card with your name on it. It is also expensive, and having to do it for every node on anything beyond a 5-node grid is pretty much unscalable.
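To make the trust argument concrete, here is a toy Java model; it is not the Globus, GAARDS or java.security API, and every class and method name is hypothetical. A certificate is reduced to a subject and an issuer, and a node accepts a peer only if the peer's issuer is in its trust store. With self-signed node certificates every node has to list every other node, n(n-1) trust-store entries across the grid, whereas a shared third-party CA needs just one trusted issuer per node.

```java
import java.util.HashSet;
import java.util.Set;

/** Toy model of certificate trust between grid nodes (hypothetical; not a real security API). */
class TrustModel {

    /** A certificate reduced to who it names and who issued (signed) it. */
    static final class Cert {
        final String subject;
        final String issuer;

        Cert(String subject, String issuer) {
            this.subject = subject;
            this.issuer = issuer;
        }
    }

    /** A node holds its own certificate plus a trust store of accepted issuers. */
    static final class Node {
        final Cert cert;
        final Set<String> trustedIssuers = new HashSet<>();

        Node(Cert cert) {
            this.cert = cert;
        }

        boolean accepts(Cert presented) {
            return trustedIssuers.contains(presented.issuer);
        }
    }

    /** Mutual authentication: each side must trust the issuer of the other's certificate. */
    static boolean canTalk(Node a, Node b) {
        return a.accepts(b.cert) && b.accepts(a.cert);
    }

    /** Self-signed certificates: every node must list every other node. */
    static int pairwiseEntries(int nodes) {
        return nodes * (nodes - 1);
    }

    /** One shared CA: every node lists just that CA. */
    static int sharedCaEntries(int nodes) {
        return nodes;
    }
}
```

For a 5-node grid that is 20 pairwise entries versus 5; at 50 nodes it is 2,450 versus 50, which is the kind of bookkeeping that components like Dorian and GTS are meant to ease.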

Enter Dorian. Dorian is a GAARDS component, essentially a Grid Service that allows other authentication methods to be used to access the grid. In effect, it allows someone to say "people authenticated by [method] at [node] are allowed to access these grid services". Thus, instead of needing a certificate, one might just enter a username and password, use their operating system credentials, or use a certificate issued by the node itself instead of a larger third party.

The other critical component is Grid Trust Services (GTS) which allows for grids with different certificate sets to talk to each other and delegate which services on each grid are available to others. It also performs important syncing functions so that updates to access and authentication chains are propagated through the different grids.

There are other bits too, like GridGrouper, which allows for simpler group paradigms (members of the group 'Gridviewer' would be able to access the various gridviewers on different nodes), and Web Service Single Sign On, which would give web applications an easy way to gain access to grid services. You can read about them at the GAARDS website.
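The group idea can be pictured with a toy model: services grant access to groups, and users gain access through membership, so adding one person to 'Gridviewer' opens every gridviewer that allows that group. This is a hypothetical sketch of the concept only, not GridGrouper's actual API, and the service names are made up.

```java
import java.util.Collections;
import java.util.HashMap;
import java.util.HashSet;
import java.util.Map;
import java.util.Set;

/** Toy group-based authorization in the spirit of GridGrouper (hypothetical; not its real API). */
class GroupAuthz {
    private final Map<String, Set<String>> members = new HashMap<>();       // group -> users
    private final Map<String, Set<String>> allowedGroups = new HashMap<>(); // service -> groups

    /** Adds a user to a group, creating the group on first use. */
    void addMember(String group, String user) {
        members.computeIfAbsent(group, g -> new HashSet<>()).add(user);
    }

    /** Grants every member of a group access to a service. */
    void allowGroup(String service, String group) {
        allowedGroups.computeIfAbsent(service, s -> new HashSet<>()).add(group);
    }

    /** A user may call a service if they belong to any group the service allows. */
    boolean isAuthorized(String user, String service) {
        for (String group : allowedGroups.getOrDefault(service, Collections.<String>emptySet())) {
            if (members.getOrDefault(group, Collections.<String>emptySet()).contains(user)) {
                return true;
            }
        }
        return false;
    }
}
```

Access decisions then depend on one membership list rather than on per-node user lists, which is what makes the group paradigm "simpler".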

Either way, I am at the periphery of understanding right now. Within a couple of days I hope to have a really good grip on how security works now (and its limitations), the use cases for what we need, and a clearer picture of how GAARDS will answer those use cases and which components are needed to do it.

Then, over the next couple of months, I'll need to implement those pieces and see what service modifications are needed to use them.

Labels:

dorian gridgrouper introduce gaards,

GBC PoC,

GlobUS

Wednesday, October 7, 2009

GIPSEPoison service move, and GIPSE Service Installers

The GIPSEPoison Service has now moved over to the google code repository (you can check out a read-only copy from http://phgrid.googlecode.com/svn/GIPSEPoisonService/trunk).

But one of the things I have been doing is making an Ant-fueled bundle that will install both the GIPSEPoisonService and the GIPSEService with a single ant command. You can read about it here: http://sites.google.com/site/phgrid/Home/service-registry/gipseserviceinstaller.

It essentially uses a properties file to download code from a repository, deploys other service-specific properties files to the downloaded code, and then calls the downloaded code's build and deploy scripts.

The idea is that for future service deploys, I can just email Dan a zip with the appropriate properties files and say "unzip this as [user] and then run 'ant all'". My next hope is to see if I can make a Maven-based download script so that I can make similar installers for things like GIPSEService. The other cool thing is that both ant and mvn should be easily callable from the NSIS installer that Dan found.
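The installer flow described above (read a properties file, check the code out, hand it the service-specific properties, run its build and deploy) might be sketched in Java like this. It is an illustration, not the actual GIPSEServiceInstaller: the property keys (repo.url, checkout.dir, service.properties, deploy.target) are hypothetical, it assumes svn and ant are on the PATH, and instead of copying the properties file into the checkout it passes the file to Ant with the -propertyfile option.

```java
import java.io.IOException;
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;
import java.util.Properties;

/** Hypothetical sketch of a properties-driven "check out, configure, build, deploy" installer. */
class InstallerPlan {

    /** Turns installer properties into an ordered list of commands to run. */
    static List<List<String>> plan(Properties p) {
        String repo = p.getProperty("repo.url");
        if (repo == null) {
            throw new IllegalArgumentException("repo.url is required");
        }
        String dir = p.getProperty("checkout.dir", "service-src");
        String target = p.getProperty("deploy.target", "deploy");

        List<List<String>> steps = new ArrayList<>();
        // 1. Download the service code from the repository.
        steps.add(Arrays.asList("svn", "checkout", repo, dir));
        // 2. Build and deploy with the checked-out build file, feeding it the service properties.
        List<String> ant = new ArrayList<>(Arrays.asList("ant", "-buildfile", dir + "/build.xml"));
        String serviceProps = p.getProperty("service.properties");
        if (serviceProps != null) {
            ant.add("-propertyfile");
            ant.add(serviceProps);
        }
        ant.add(target);
        steps.add(ant);
        return steps;
    }

    /** Runs each step in order and stops at the first command that fails. */
    static void run(List<List<String>> steps) throws IOException, InterruptedException {
        for (List<String> cmd : steps) {
            int exit = new ProcessBuilder(cmd).inheritIO().start().waitFor();
            if (exit != 0) {
                throw new IllegalStateException("step failed (exit " + exit + "): " + cmd);
            }
        }
    }
}
```

Keeping the plan separate from `run` makes it easy to print or test the steps before anything is actually executed on a node.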

Labels:

GBC PoC,

GIPSEPoison,

GIPSEService,

installer

Monday, October 5, 2009

Open Source Installshield Equivalent

In an effort to reduce the complexity of installing grid nodes, I have been evaluating open source products that will allow us to create an automated installer. The goal is for a user to download grid software from PHGrid and run an install program that sets up a node with minimal user interaction.

The Nullsoft Scriptable Install System (NSIS) is one noteworthy product that stands up to this task. NSIS has low system overhead, builds Windows installers, is scriptable, and supports multiple compression methods.

Screenshots and additional information can be found at: http://nsis.sourceforge.net/Screenshots

Friday, October 2, 2009

More code migration

I just finished moving two additional subprojects from our SourceForge repository to the newer Google Code repository.

From now on, please access gridviewer and gmap-polygon through google code. The sf version will stick around for a while to minimize inconvenience of repository switching, but eventually it will be replaced with just a pointer to the google code repository.

We're switching to Google's site for a few minor reasons: 1) the issue tracking / wiki software is better, and it lets you create pretty clean workflows through its tagging system; 2) the code review feature is useful; 3) their Subversion server is considerably faster than SourceForge's; and 4) it's easy to switch, so we can switch back to SourceForge if it becomes better.

Note that we're not moving everything over to Google Code (yet), so it will be some time before all the subprojects come over.

Labels:

googlecode,

Programming,

source code,

sourceforge,

subversion,

svn

Thursday, October 1, 2009

Gridviewer Updates

Gridviewer was updated with the following changes (enhancements):

http://ncphi.phgrid.net:8080/gridviewer/

- Region query boxes are now hidden by default, which seems to load more cleanly.

- GET requests are now supported, meaning you can use a URL to generate a specific query.

- Email link: added an email link that generates a shortened URL (using bit.ly) and opens a pre-formatted email.

- Light modifications to the JavaScript for performance and smaller file sizes.
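Because Gridviewer now accepts GET requests, a query can be captured as a shareable URL. Here is a small sketch of building such a link with properly encoded parameters; the parameter names used in the example (state, start) are hypothetical placeholders, since Gridviewer's actual query parameters aren't listed here.

```java
import java.io.UnsupportedEncodingException;
import java.net.URLEncoder;
import java.util.Map;
import java.util.StringJoiner;

/** Builds a GET query URL; parameter names passed in are up to the caller (hypothetical here). */
class GridviewerLink {

    /** Appends URL-encoded key=value pairs to the base URL, in the map's iteration order. */
    static String build(String base, Map<String, String> params) {
        StringJoiner query = new StringJoiner("&");
        for (Map.Entry<String, String> e : params.entrySet()) {
            query.add(encode(e.getKey()) + "=" + encode(e.getValue()));
        }
        return params.isEmpty() ? base : base + "?" + query;
    }

    /** Form-encodes a value as UTF-8 (spaces become '+', '&' becomes %26, and so on). */
    private static String encode(String s) {
        try {
            return URLEncoder.encode(s, "UTF-8");
        } catch (UnsupportedEncodingException e) {
            throw new IllegalStateException("UTF-8 is always supported", e);
        }
    }
}
```

Encoding every key and value matters once values contain spaces or ampersands, otherwise the link silently turns into a different query.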

Tuesday, September 29, 2009

GIPSEPoison supporting zipcodes, now onto easier installers

So, after a lot of planning, I finally managed to get general zip5 and zip3 support working in the GIPSEPoison service. I got it so that the service would only ever have to make two calls to the NPDS-poison service (one for states, one for zips) and that it could return zip3 and zip5 and state results simultaneously, and checked in the code after testing it.
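One way to serve ZIP3 and ZIP5 results from a single zip-level call is to roll the ZIP5 counts up by their first three digits. The post doesn't say this is exactly how GIPSEPoison does it, so treat the following as an illustrative assumption; the class name and counts are made up.

```java
import java.util.Map;
import java.util.TreeMap;

/** Illustrative roll-up of ZIP5 counts into ZIP3 buckets (first three digits of the ZIP code). */
class ZipRollup {

    static Map<String, Integer> toZip3(Map<String, Integer> zip5Counts) {
        Map<String, Integer> zip3Counts = new TreeMap<>();
        for (Map.Entry<String, Integer> entry : zip5Counts.entrySet()) {
            String zip5 = entry.getKey();
            if (zip5.length() != 5) {
                throw new IllegalArgumentException("expected a 5-digit ZIP: " + zip5);
            }
            // Sum every ZIP5 that shares the same three-digit prefix.
            zip3Counts.merge(zip5.substring(0, 3), entry.getValue(), Integer::sum);
        }
        return zip3Counts;
    }

    public static void main(String[] args) {
        Map<String, Integer> zip5 = new TreeMap<>();
        zip5.put("30303", 4); // hypothetical counts
        zip5.put("30309", 2);
        zip5.put("80202", 5);
        System.out.println(toZip3(zip5)); // {303=6, 802=5}
    }
}
```

Deriving ZIP3 locally keeps the upstream traffic at one call for zips, regardless of which geographic resolutions the client asked for.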

The next thing I am planning to work on is some Ant scripting for building and deploying the service with one simple command. The hope is to turn the roughly ten-step install process for this service into about two steps (one if you are repeating the process or just installing a patch).

Otherwise, it seems the further future involves the creation of more services, more intricate installers, portals and other service/viewer aggregators. My hope is to just start creating things that make fetching and installing these items as easy as possible.

Cheers

Monday, September 28, 2009

Unsupported Class Version Error

The error:

Exception in thread "main" java.lang.UnsupportedClassVersionError: Bad version number in .class file

Explanation:

This happens when a class or jar file compiled with a newer version of Java is executed by an older version of Java.

The Solution:

Either upgrade the Java version on the executing machine, or recompile the code for the older version (for example, with javac's -source and -target options).
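To see which side of the mismatch you are on, you can read the version a class was compiled for. A class file starts with the magic number 0xCAFEBABE followed by a minor and a major version; major 49 is Java 5, 50 is Java 6 and 51 is Java 7 (`javap -verbose` reports the same "major version" value). The utility below is a minimal sketch of that check; the class name is just for illustration.

```java
import java.io.DataInputStream;
import java.io.FileInputStream;
import java.io.IOException;
import java.io.InputStream;

/** Reads a class file's major version to tell which Java release compiled it. */
class ClassVersion {

    /** Returns the major_version field from the class-file header. */
    static int majorVersion(InputStream in) throws IOException {
        DataInputStream data = new DataInputStream(in);
        if (data.readInt() != 0xCAFEBABE) {
            throw new IOException("not a class file (bad magic number)");
        }
        data.readUnsignedShort();        // minor_version, not needed here
        return data.readUnsignedShort(); // major_version
    }

    /** Maps a major version to a Java release: 49 -> 5, 50 -> 6, 51 -> 7, and so on. */
    static int javaRelease(int major) {
        if (major < 49) {
            throw new IllegalArgumentException("pre-Java 5 class file, major " + major);
        }
        return major - 44;
    }

    public static void main(String[] args) throws IOException {
        for (String path : args) {
            try (InputStream in = new FileInputStream(path)) {
                int major = majorVersion(in);
                System.out.println(path + ": major " + major + " (Java " + javaRelease(major) + ")");
            }
        }
    }
}
```

Extract a class from the jar (for example with `jar xf`), run the tool on it, and compare the result with `java -version` on the machine that throws the error.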

Friday, September 25, 2009

Gridviewer deployment

The latest version of Gridviewer was deployed this morning on the training node. It has all the latest security additions.

http://ncphi.phgrid.net:8080/gridviewer/

Thursday, September 24, 2009

Invalid Encoding Name in Tomcat 6

Here's an error message you may come across when deploying Globus to Tomcat 6. It appears when Tomcat starts.

SEVERE: Parse Fatal Error at line 1 column 40: Invalid encoding name "cp1252".
org.xml.sax.SAXParseException: Invalid encoding name "cp1252".

Use the following procedure to correct the error:

1. Change directory to %CATALINA_HOME%\conf

2. Open tomcat-users.xml in a text editor

3. Look at the first line of the file and change encoding='cp1252' to encoding='utf-8'

4. Save the file and restart Tomcat
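Steps 2 and 3 can also be scripted. This is a minimal sketch, assuming the file really is saved in cp1252: it re-decodes the bytes and writes them back as UTF-8, so any non-ASCII characters stay valid under the new declaration. The function names are hypothetical, and it is worth backing up tomcat-users.xml first.

```python
import re
from pathlib import Path

# Matches encoding='cp1252' or encoding="cp1252" in the XML declaration.
CP1252_DECL = re.compile(r"""encoding=(['"])cp1252\1""", re.IGNORECASE)


def fix_encoding_declaration(text: str) -> str:
    """Rewrite the encoding on the first line (the XML declaration) to utf-8."""
    first, newline, rest = text.partition("\n")
    return CP1252_DECL.sub(r"encoding=\1utf-8\1", first, count=1) + newline + rest


def fix_tomcat_users(path) -> None:
    """Re-encode a cp1252 tomcat-users.xml as UTF-8 and update its declaration."""
    p = Path(path)
    text = p.read_bytes().decode("cp1252")
    p.write_bytes(fix_encoding_declaration(text).encode("utf-8"))
```

On the server this would be called with the path %CATALINA_HOME%\conf\tomcat-users.xml, followed by a Tomcat restart as in step 4.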

A web-based portal for PHGrid - Initial screenshots for discussion

I keep thinking about the public health workforce and their most likely perspective on our PHGrid activity. My thought is that what matters to them is having a new, robust, and intuitive resource at their disposal that makes their work easier, not that it is a cool technology based on the Globus Toolkit that leverages grid computing.

Thus, to better demonstrate to the public health community the potential capability of the PHGrid architecture and ecosystem, I created some wireframe mockups articulating my thoughts around a web-based PHGrid portal. The goal is to show users that PHGrid is not just about one GIPSE (aggregate data) service and one web-based geographic mapping "viewer" (i.e., a PHGrid Gadget), but about a dynamic ecosystem potentially consisting of hundreds of different PHGrid resources (services, applications, Gadgets, etc.) created by many and shared among many. This portal would provide a single, user-friendly, secure access point to PHGrid resources (services, applications, Gadgets, data, computational power, etc.).

These wireframe screenshots are very crude, have many errors, and are in no way exhaustive. They are at least a starting point for discussion.

Of course, the portal may end up with many more features, but I really do see it requiring three fundamental components:

1. General, secure, customizable PHGrid dashboard. I see this as a combination of MyYahoo, iGoogle, and iTunes for PHGrid. It combines, for example, social networking, eLearning, news, alerting, and statistics.

2. Real-time directory of available PHGrid resources. This combines features of an automated standards-based (UDDI) registry with integrated social aspects of eBay and Amazon.com. Users can quickly look for specific resources (services, applications, etc), examine their strengths and weaknesses, and ultimately request access to the resource. In other words, this part of the portal provides user-friendly access to a dynamic PHGrid resource ecosystem.

3. MyGrid - A user-defined PHGrid "workbench." This part of the portal allows users to customize a palette of resources that are important or relevant to them, which simplifies the process of organizing PHGrid resources.

Depending on the access control requirements, the user may be able to obtain immediate access to the resource or may have to wait for the resource provider to grant access. Some resources may even require a document to be filled out and submitted. As the Access Status feature of the site shows, the requesting user will be able to monitor the status of his/her request, regardless of the specific process. Once the user has been granted authorization, he/she can use the Service/Gadget Automation tool to create ad-hoc and recurring workflows/macros that tie together multiple resources. This would have aspects of both the Taverna Workbench and Yahoo! Pipes. Clearly this area needs a lot of work, but I feel it may grow into a much larger aspect of the site.

Here is an example of a workflow (i.e., a macro) that could be created and saved, to be run at any time or automated to run on a recurring basis:

After logging into the system with their secure credentials, a state epidemiologist goes to the MyGrid part of the portal. They then complete the following steps:

1. Requests specific data elements from data source X [service a]

2. Combines this data with data from source Y [service b]

3. Runs a Natural Language Processing (NLP) engine on one data field from source Y to convert a large chunk of text from a family history field into discrete coded data elements [service c]

4. Performs geospatial analytics on the newly generated data set Z [service d]

5. Visualizes the analytic output using specific criteria [gadget e]

6. Creates images from the visualization tool and exports them to a web-based tool to be accessed by his/her colleagues at the local and county health departments within that state [service f and gadget g]

7. Saves this workflow and configures it to run every night at midnight.
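To make the idea concrete, a saved workflow can be thought of as an ordered list of steps, each handing its output to the next, plus a schedule. This is only a sketch: the Workflow class and the toy steps are hypothetical stand-ins for services a through g, not an existing PHGrid API.

```python
from dataclasses import dataclass, field
from datetime import datetime, timedelta
from typing import Any, Callable, List, Tuple

Step = Callable[[Any], Any]


@dataclass
class Workflow:
    """A saved macro: named steps run in order, each feeding the next."""
    name: str
    steps: List[Tuple[str, Step]] = field(default_factory=list)

    def add(self, label: str, step: Step) -> "Workflow":
        self.steps.append((label, step))
        return self  # allow chaining when building the workflow

    def run(self, data: Any = None) -> Any:
        for _label, step in self.steps:
            data = step(data)
        return data


def next_midnight(now: datetime) -> datetime:
    """When a nightly run scheduled for midnight should fire next."""
    return datetime.combine(now.date() + timedelta(days=1), datetime.min.time())
```

In this picture, the first step would ignore its input and fetch from source X, the second would merge in source Y, and so on; a scheduler would call run() at next_midnight(datetime.now()).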

I look forward to others' thoughts on this. My hope is that we can create a very rough mock-up of this in the near future. I believe it is only through the creation of a resource such as this that we can clearly articulate the real value of PHGrid's robust, secure, SOA-based architecture/ecosystem to the overall public health community, not just to the IT- and informatics-savvy public health workforce.

Thanks! Tom

Wednesday, September 23, 2009

Many SDN deploys and GIPSEPoison tweaks

So, I finally got the "All Clinical Effects" element working in GIPSEPoison. If selected, it tells the poison service to return all the human call volume for a given region instead of the volume for a particular clinical effect.

The next step is to get zip5 and zip3 working. I plan to do it the same way I did in Quicksilver: ask for all the counts for a state and then filter out the zip codes I don't need. If zip5 is selected, the matching counts are copied straight into the observations; if zip3 is selected, the zip5 counts are bucket-aggregated by their first three digits. Ironically, having the whole state returned grouped by zip code is a lot easier than trying to list a long set of zip5s for an entire state (as would be the case with most Gridviewer zip3 searches).
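A minimal sketch of that filtering and bucketing, assuming the service's whole-state answer can be treated as a mapping from zip5 to count. The function name and data shapes are assumptions, not GIPSEPoison's actual code.

```python
from collections import defaultdict
from typing import Dict, Iterable


def to_observations(state_counts: Dict[str, int], wanted: Iterable[str], level: str) -> Dict[str, int]:
    """Turn whole-state counts keyed by zip5 into the requested observations.

    state_counts: {zip5: count} for every zip code in the state.
    wanted: the zip5s or zip3s the caller asked for.
    level: "zip5" (filter and copy) or "zip3" (filter and bucket-aggregate).
    """
    wanted = set(wanted)
    if level == "zip5":
        return {z: n for z, n in state_counts.items() if z in wanted}
    if level == "zip3":
        buckets: Dict[str, int] = defaultdict(int)
        for z, n in state_counts.items():
            if z[:3] in wanted:  # a zip3 is the first three digits of a zip5
                buckets[z[:3]] += n
        return dict(buckets)
    raise ValueError("level must be 'zip5' or 'zip3'")
```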

I also made a little zip3-to-state properties file, in the hope of not needing the geolocation database (and saving installation steps).
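Reading such a file takes only a few lines. In the Java service itself, java.util.Properties would do this; the Python below is just an illustration. It assumes simple key=value lines such as 303=GA (the entries are illustrative) and does not handle the ':' separators, escapes, or line continuations that the full properties format allows.

```python
from typing import Dict, List


def load_zip3_states(text: str) -> Dict[str, str]:
    """Parse simple key=value lines, e.g. '303=GA', skipping blanks and comments."""
    mapping = {}
    for raw in text.splitlines():
        line = raw.strip()
        if not line or line[0] in "#!":
            continue
        key, sep, value = line.partition("=")
        if sep:
            mapping[key.strip()] = value.strip()
    return mapping


def zip3s_for_state(mapping: Dict[str, str], state: str) -> List[str]:
    """All zip3 prefixes that the file assigns to the given state."""
    return sorted(z for z, s in mapping.items() if s == state)
```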

Otherwise, I have also been helping a lot with deploys and scans for various targets, which has prompted lots of discussion about automating these tasks so they take up less time as they become more frequent. The hope is that we can just tell someone a build label, have them hit "go" with that variable set, and whatever needs to be deployed will get deployed automagically.

Either way, that is not the only improvement being discussed. I get the feeling that next week a whole lot of cool new services and visualizations are going to start getting hammered onto and into various design tables.

Cheers,

Peter

Tuesday, September 22, 2009

H1N1 Dashboard

While not technically PHGrid, the Novel H1N1 Collaboration Project's Enrollment Dashboard is an interesting approach to data visualization that may filter into PHGrid prototypes for visualization.

You won't be able to view the dashboard since it's not public, but the multi-layer Google map is pretty interesting.

Saturday, September 19, 2009

neuGRID Project Video Demonstration

A video demonstration of the neuGRID project and platform is available at: http://www.youtube.com/watch?v=fpfD6GZ90tQ&v=30

For more information, contact:

David Manset

CEO MAAT France (maat Gknowledge Group)

Immeuble Alliance Entrée A,

74160 Archamps (France)

Mob. 0034 687 802 661

Tel. 0033 450 439 602

Fax. 0033 450 439 601

dmanset@maat-g.com www.maat-g.com

Friday, September 18, 2009

Gridviewer improvements

We were able to complete a few substantial improvements to the Gridviewer application. The changes listed below were deployed on the training node (http://ncphi.phgrid.net:8080/gridviewer/):

- Added CSV extract download per query request (click Download Data to get an extract).

- Added an HTML data table with sortable columns for each individual query request as well as for the combined data set (click the Display data link to see the control). This feature still needs speed improvements; further improvements could include subtotals by region or date.

- Fixed a bug in the console log's time display.

- Appended the build version to the title and header.
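The per-query extract and the suggested subtotals boil down to two small operations. A sketch in Python of the idea only (Gridviewer's own implementation is not shown here); the column names are assumptions.

```python
import csv
import io
from collections import defaultdict
from typing import Dict, List

FIELDS = ["region", "date", "count"]  # hypothetical column layout


def rows_to_csv(rows: List[Dict[str, object]]) -> str:
    """Render one query's results as CSV text for a download link."""
    buffer = io.StringIO()
    writer = csv.DictWriter(buffer, fieldnames=FIELDS, lineterminator="\n")
    writer.writeheader()
    writer.writerows(rows)
    return buffer.getvalue()


def subtotal_by(rows: List[Dict[str, object]], key: str) -> Dict[object, int]:
    """Subtotal the counts by region or by date."""
    totals: Dict[object, int] = defaultdict(int)
    for row in rows:
        totals[row[key]] += int(row["count"])
    return dict(totals)
```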

Wednesday, September 16, 2009

GSA launches portal where agencies can buy cloud computing services

Full article here:

Kundra's great experiment: Government apps 'store front' opens for business

http://fcw.com/articles/2009/09/15/gov-apps-store.aspx?s=fcwdaily_160909

Direct link to the new service:

Tuesday, September 15, 2009

GIPSE Request Examples

I've received another request for better documentation on the GIPSEService requests (what stratifiers are used for what, better examples, etc.), so I will create something soon and post it to this blog.

In the meantime, the JUnit tests in the Google Code project run through a series of example requests against the NCPHI node, which may help a little.

Friday, September 11, 2009

Got GIPSEPoison working on the training node.

So, after many months of on-again, off-again trying, I finally figured out the library conflict that was preventing GIPSEPoison from loading on the training node.

The library ended up being WSDL4J. The CXF libraries being used by Jeremy Espino's awesome NPDS Client needed a newer version of wsdl4j than the one that was provided with Globus. Luckily, the newer version seems backwards compatible.

Thus, GIPSEPoison can now be seen as an option in http://ncphi.phgrid.net:8080/gridviewer/

If you read some of my earlier posts, you'll know that the first step was to import the libraries separately (rather than as part of a jar-with-dependencies). Beyond that, the error seemed to indicate a WSDL or other parsing error, so WSDL4J was the first duplicate jar I tried.
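Hunting for duplicate jars can be partly automated. This is a rough sketch that assumes jar file names follow the usual name-version.jar convention. It only flags candidates (the same artifact name in more than one place) and cannot tell which copy the class loader actually picked. The directories you would pass in, for example $GLOBUS_LOCATION/lib and the service's own lib directory, are up to you.

```python
import os
import re
from collections import defaultdict
from pathlib import Path
from typing import Dict, Iterable, List

# "wsdl4j-1.6.2.jar" -> name "wsdl4j", version "1.6.2"; "wsdl4j.jar" -> name "wsdl4j".
JAR_NAME = re.compile(r"^(?P<name>.+?)(?:-(?P<version>\d[\w.\-]*))?\.jar$")


def jar_paths(*dirs) -> List[str]:
    """Every .jar under the given library directories."""
    return [str(p) for d in dirs for p in Path(d).rglob("*.jar")]


def duplicate_jars(paths: Iterable[str]) -> Dict[str, List[str]]:
    """Group jars by artifact name and keep only names that appear more than once."""
    groups = defaultdict(list)
    for path in paths:
        match = JAR_NAME.match(os.path.basename(path))
        if match:
            groups[match.group("name")].append(path)
    return {name: sorted(found) for name, found in groups.items() if len(found) > 1}
```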

The next couple of days are going to be spent fixing up GIPSEPoison: giving it an "all clinical effects" option, plus zip3 and zip5 support.

Cheers,

Peter

Tuesday, September 8, 2009

PHIN Conference Thoughts.

Greetings all,

It has been a week since the PHIN conference started, with a long weekend in between, and today I wanted to list the impressions I got from the crowd, the panels, and the discussions I had there. Then I hope to expand a bit with my notes and more thoughts in future blog entries.

- Impression the first(e): Where are the users?

At least two of the panels I attended could be summarized as "this/those informatician(s) used that/those cool grid technolog(y/ies) and find it helpful in doing their work; now if we could only get more of those technologies and work on making them talk to each other". The panels showed me several things. The most important is that informaticians, doctors, and users in general knew that there was stuff out there, and they were using it to make their lives easier (i.e., "Peter feels validated"). The next coolest thing we learned is that the users had very good opinions and suggestions about how the products could be made to better suit their needs. The final point I realized is that while many of the fine-grained steps in the process were different (each health department has a different end data format and a different way of describing what they consider to be 'flu'), a lot of the general needs were the same (speed, ease of use, customizability, ease of interaction with other services). I feel that this shows the need to find as many of the users as possible, to create a very good space for them to voice their opinions and needs, and hopefully to help each other install and adjust GRID-like products to suit those needs.

The other user impression I got is that PHGrid, as a user of things like Globus and CAGrid (Introduce), is getting a lot of what it needs from those programmers and communities. Globus is coming out with cool new things that solve a lot of the old problems, and CAGrid is improving Introduce to match the new version(s) of Globus and answering the "how do we create a grid without having to buy lots of expensive third-party certificates, and how do we simplify registration/addition of new nodes" problems. CAGrid is also looking into ways to remote-deploy services to user boxes so they can run analysis or other functions on data that cannot leave their organization.

- Impression the second(e): We should do the things to make it easy for users even if it makes our lives more difficult.

Several of the panels included the phrase "at our local health department... we tend to see flu like this... but next door, they see flu like this...". In short: "Two health departments, three classifications". In addition, local health departments are wary of attempts to take large chunks of their data so that someone else can re-classify it. But they are fine with setting up a service that only gives summary data (no patient info, just counts). They also have little trouble saying "when the service asks for an aggregate count of flu, we'll give them an aggregate count of what we think flu is". Furthermore, they like the idea of services that allow them to re-organize or re-classify data so it matches a standard, so long as they don't have to email large files or mail DVDs to some place outside of their control.
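The arrangement the panels described, where each department answers with a count computed from its own definition of flu, can be sketched as follows. Everything here is hypothetical: the record fields and both definitions are invented to show that the same query can return counts based on different local classifications while no patient-level data leaves the department.

```python
from typing import Callable, Dict, Iterable

Record = Dict[str, object]  # one local visit record; it never leaves the department


def aggregate_count(records: Iterable[Record], is_flu: Callable[[Record], bool]) -> Dict[str, object]:
    """Answer a query with summary data only: no patient fields, just a count."""
    return {"indicator": "flu", "count": sum(1 for r in records if is_flu(r))}


# Two hypothetical local definitions of "flu".
def dept_a_is_flu(r: Record) -> bool:
    return "flu" in str(r["complaint"]).lower()


def dept_b_is_flu(r: Record) -> bool:
    complaint = str(r["complaint"]).lower()
    return bool(r["fever"]) and ("cough" in complaint or "sore throat" in complaint)
```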

This means the biggest impediment to using these types of services is probably going to be the steep learning curve required to install all the various toolkits (Globus, caBIG, Tomcat, certificates) and the configuration complexities of all the applications. The more time we spend making an installer, the less time we will have to spend on the phone with interested health departments telling them how to set it up. The same goes for how easy we make it for health departments to write the queries into their own database(s) or datasets. Finally, we need to focus on allowing customizable outputs from the service viewers we create. If someone can go to GridViewer and get a sample of data to make sure that the CSV we spit out can be read by their analytics, that will save them some time in a word processor.

Thus: installers, view panes, and things that centralize and simplify configuration and installation, with the obvious stuff up front and the complicated stuff defaulted but in an obvious place for modification. Think Firefox. Think Google.

- Impression the Third(e): Everyone is really happy that all the stuff is relatively open, free (as in speech), and everyone is acquainted with each other and thinking of ways to collaborate and suggest improvements.

If we make a service builder, we will probably be extending CAGrid's Introduce. Dr. Jeremy Espino is tweaking Globus service projects with Ivy so one doesn't have to upload various libraries into a repository. The new version of Globus is probably going to use CXF instead of Axis, which will probably improve everything and make us impromptu beta-testers, and a lot of people seem to be marveling at Quicksilver and Gridviewer and looking forward to their improvements.

So generally, the PHIN conference helped me focus on what I think we need to be doing for the next year, namely getting ready and making our stuff flexible enough for a lot of users to do a lot of different things to make their statistical and analysis efforts easier.

Friday, September 4, 2009

Sparklines

This week's build includes the following:

1. Moved the Flot chart out of the marker info window and into a GreyBox for an improved UI.

2. Corrected loading image bug

3. Corrected a bug where hidden markers reappeared when a new query was submitted

4. Corrected date range selection bug

5. Added sparkline graphs per region with ability to change chart type.

6. Improved show/hide on region selections and filter selections

7. Modified the AJAX requests for Flot charts to match server-side changes for facilities/data sources

Not happy with the way the site renders in IE7, but it is an inferior product anyway. When possible, use a Mozilla or WebKit browser.

Tuesday, September 1, 2009

2nd iteration..always room for improvement

I am happy with the number of improvements made to the Gridviewer UI in the few weeks we had. We eliminated post-back calls to the server for every query, developed custom pin overlays, added service areas and ages to the query parameters, redesigned the layout, and made a considerable number of general aesthetic changes. This second release represents what is possible using agile development and, of course, JavaScript.

On that note, I would like to point out my current thoughts on future improvements.

1. Charts: The first Gridviewer used the pin info windows to display region-specific chart data, and we maintained that in the 2nd release. The issue with this design is that the info window is too large for the scale of the map. In my opinion, we should move the chart out of the map completely and into a dynamic layer around the form. This would eliminate all the dragging of the map needed to see the whole chart.

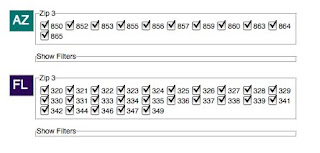

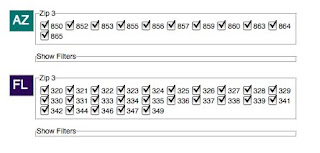

2. Primary navigation: I waver on how important the map actually is to the user. When I first began working on Gridviewer, my impression was that the map should serve as the primary navigation, meaning users would use the map for drilling into all the query results. Now I believe the map is really subordinate to the query result boxes (see image below). My opinion is that we should expand the current boxes to include more informative data results, such as simple bar charts showing zip totals, or possibly a sparkline for each respective zip. A user should be able to click on AZ, get a chart for that collection, and have the map zoom into that region. As the user selects items, either pins on the map or check-boxes in the query boxes, each control should respond to that event accordingly, meaning the map zooms in and out and the chart data changes based on the selected region. As others have mentioned, a data download should be available per query box as well.
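The coordinated behavior described in item 2 is essentially publish/subscribe: one selection event, several listening controls. Gridviewer itself is JavaScript, so this Python sketch only illustrates the wiring; the event name and handlers are hypothetical.

```python
from collections import defaultdict
from typing import Callable, DefaultDict, List

Handler = Callable[[str], None]


class SelectionBus:
    """Tiny publish/subscribe hub: every control listening for a region selection is notified."""

    def __init__(self) -> None:
        self._handlers: DefaultDict[str, List[Handler]] = defaultdict(list)

    def subscribe(self, event: str, handler: Handler) -> None:
        self._handlers[event].append(handler)

    def publish(self, event: str, region: str) -> None:
        # Handlers run in subscription order, e.g. map zoom first, then chart refresh.
        for handler in self._handlers[event]:
            handler(region)
```

With this design the map, the chart, and the query boxes never call each other directly; whichever control the user clicks just publishes the selection, and adding a new control (such as a download link) means one more subscription.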

3. Form

I wonder if we could reorganize the form selection box to have an "advanced options" selection that would reveal (show/hide) the age, dispositions, and service areas. It seems that age and dispositions could default to all, and service areas should mostly be driven by the classifier selection. This would be less intimidating to the user and would also provide more space.

4. Comments

One suggestion was commenting, which I am really in favor of. I believe that before we implement this, people must be able to generate links to a saved query. It would not be a large hurdle to have one URL per query (box), but having a single URL for multiple queries is going to be a challenge. We could look at using something like ShareThis once we have the URL issue worked out.
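One possible way to fold several query boxes into one shareable link is to prefix each query's parameters with its box index. This is a minimal sketch using Python's standard urllib.parse. The q0./q1. naming scheme and the parameter names are hypothetical, not Gridviewer's actual URL format, and multi-valued parameters are not handled.

```python
from typing import Dict, List
from urllib.parse import parse_qsl, urlencode, urlsplit


def queries_to_url(base: str, queries: List[Dict[str, str]]) -> str:
    """Encode several query boxes into one URL: q0.region=AZ&q1.region=GA..."""
    params = []
    for index, query in enumerate(queries):
        for key, value in query.items():
            params.append((f"q{index}.{key}", value))
    return base + "?" + urlencode(params)


def url_to_queries(url: str) -> List[Dict[str, str]]:
    """Rebuild the list of query boxes from a URL made by queries_to_url."""
    grouped: Dict[int, Dict[str, str]] = {}
    for name, value in parse_qsl(urlsplit(url).query):
        prefix, _, key = name.partition(".")
        if prefix.startswith("q") and prefix[1:].isdigit() and key:
            grouped.setdefault(int(prefix[1:]), {})[key] = value
    return [grouped[i] for i in sorted(grouped)]
```

For example, two boxes for AZ and GA would become http://ncphi.phgrid.net:8080/gridviewer/?q0.region=AZ&...&q1.region=GA&..., and opening that link would rebuild both boxes.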

Monday, August 24, 2009

More updates for H1N1

I'm just putting out a general update post since Peter and Chris haven't said anything yet.

Marcelo updated the GIPSEService Google Code project with some performance tuning and configuration enhancements. These changes don't affect the input/output, but they make the code easier to maintain.

Chris and Peter put out an update to the gridViewer that looks pretty cool, but more importantly, allows you to overlay different indicators, data sources and services on top of or next to each other.

We also now have an EDVisits indicator, so calculations that require a denominator can now be performed.
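As a rough illustration of why the denominator matters, the Java sketch below turns per-region indicator counts into a percentage of ED visits. The class and method names, the region keys, and the choice of an ILI count as the numerator are hypothetical; regions with a missing or zero EDVisits count are skipped rather than divided by zero.

```java
import java.util.Map;
import java.util.SortedMap;
import java.util.TreeMap;

// Hypothetical helper: percent of ED visits per region, e.g. ILI visits / EDVisits * 100.
class EdVisitRatio {

    /** Returns 100 * count / edVisits, or null when the denominator is missing or zero. */
    static Double percentOfEdVisits(long count, Long edVisits) {
        if (edVisits == null || edVisits.longValue() == 0L) return null;
        return Double.valueOf(100.0 * count / edVisits.longValue());
    }

    /** Joins the numerator and EDVisits maps on region key; regions without a usable denominator are left out. */
    static SortedMap<String, Double> byRegion(Map<String, Long> counts, Map<String, Long> edVisits) {
        SortedMap<String, Double> result = new TreeMap<String, Double>();
        for (Map.Entry<String, Long> e : counts.entrySet()) {
            Double pct = percentOfEdVisits(e.getValue().longValue(), edVisits.get(e.getKey()));
            if (pct != null) result.put(e.getKey(), pct);
        }
        return result;
    }
}
```

For example, 25 ILI visits in a region with 500 EDVisits gives 5.0 (percent).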

Tuesday, August 18, 2009

Correcting Globus Handshake Errors

The Problem:

When Globus-WS is deployed to Tomcat 5.5.x on Windows, a handshake error is thrown when secure Globus commands are issued. The secure commands run fine in a standalone container but fail when Globus is deployed to Tomcat.

Example:

C:\>counter-client -m conv -z none -s https://192.168.20.120:8443/wsrf/services/SecureCounterService

Error: ; nested exception is:
javax.xml.rpc.soap.SOAPFaultException: ; nested exception is:
org.globus.common.ChainedIOException: Authentication failed [Caused by: Failure unspecified at GSS-API level [Caused by: Handshake failure]]

The Solution:

(Commands are based on JDK 1.5.x)

This error occurs when the SSL client does not trust the CA that signed the certificate. The solution is to add the CA certificate as a trusted CA entry.

1. Create a Java Key Store:

keytool -genkey -alias servercert -keyalg RSA -dname "CN=Your_host_name, OU=yoursite.net, O=your_organization, L=city, ST=state, C=country" -keypass changeit -keystore server.jks -storepass changeit

2. Create a PKCS12 Keystore:

keytool -genkey -alias globus -keystore globus.p12 -storetype pkcs12 -keyalg RSA -dname "CN=Your_host_name, OU=yoursite.net, O=your_organization, L=city, ST=state, C=country" -keypass changeit -storepass changeit

3. Export the certificate from your PKCS12 keystore:

keytool -export -alias globus -file globus.cer -keystore globus.p12 -storetype pkcs12 -storepass changeit

4. Import the exported certificate into your Java keystore:

keytool -import -keystore server.jks -alias globus -file globus.cer -v -trustcacerts -noprompt -storepass changeit

5. Import the 3rd party CA into your Java keystore as a trusted CA:

keytool -import -keystore server.jks -alias globusCA -file c:\etc\grid-security\certificates\31f15ec4.0 -v -trustcacerts -noprompt -storepass changeit

6. Import the host certificate issued by the 3rd Party CA into your Java Keystore.

keytool -import -keystore server.jks -alias containercert -file c:\etc\grid-security\importcontainercert.pem -v -trustcacerts -noprompt -storepass changeit

Based on the procedure above, the HTTPS connector in your server.xml file should look like this:

<Connector
    port="8443" maxThreads="150"
    minSpareThreads="25" maxSpareThreads="75"
    autoFlush="true" disableUploadTimeout="true"
    scheme="https" enableLookups="true"
    acceptCount="10" debug="0"
    protocolHandlerClassName="org.apache.coyote.http11.Http11Protocol"
    socketFactory="org.globus.tomcat.catalina.net.BaseHTTPSServerSocketFactory"
    keystoreFile="C:\apache-tomcat-5.5.27\conf\server.jks"
    keystorePass="changeit"
    cacertdir="c:\etc\grid-security\certificates"
    encryption="true"/>
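The `keystoreFile` and `keystorePass` attributes have to match the keystore and `-storepass` used in the keytool steps. One quick way to check that pairing outside Tomcat is to load the keystore with the same password. This is a minimal Java sketch of that idea; the class name `ConnectorKeystoreCheck` is hypothetical, and it only checks that the file opens, not that Globus accepts the certificates inside it.

```java
import java.io.FileInputStream;
import java.io.IOException;
import java.io.InputStream;
import java.security.KeyStore;

// Hypothetical check: does keystoreFile open with keystorePass from server.xml?
class ConnectorKeystoreCheck {

    /** Returns true if the JKS data loads with the password; the stream is closed either way. */
    static boolean opensWith(InputStream in, char[] password) throws Exception {
        KeyStore ks = KeyStore.getInstance("JKS");
        try {
            ks.load(in, password); // with a non-null password, JKS verifies its integrity check
            return true;
        } catch (IOException e) {
            // A wrong password (or a corrupt file) surfaces as an IOException
            return false;
        } finally {
            in.close();
        }
    }

    public static void main(String[] args) throws Exception {
        // Defaults mirror the server.xml values shown above; pass your own as arguments.
        String file = args.length > 0 ? args[0] : "C:\\apache-tomcat-5.5.27\\conf\\server.jks";
        String pass = args.length > 1 ? args[1] : "changeit";
        boolean ok = opensWith(new FileInputStream(file), pass.toCharArray());
        System.out.println(ok ? "keystorePass opens keystoreFile"
                              : "keystorePass does NOT open keystoreFile");
    }
}
```

The sketch sticks to pre-Java 7 syntax, so it should also compile on the JDK 1.5/1.6 setups this post targets.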

---------------------------------------------------------

The commands change slightly when using JDK 1.6.x.

1. Create a Java Key Store:

keytool -genkeypair -alias servercert -keyalg RSA -dname "CN=Your_host_name, OU=yoursite.net, O=your_organization, L=city, ST=state, C=country" -keypass changeit -keystore server.jks -storepass changeit

2. Create a PKCS12 Keystore:

keytool -genkeypair -alias globus -keystore globus.p12 -storetype pkcs12 -keyalg RSA -dname "CN=Your_host_name, OU=yoursite.net, O=your_organization, L=city, ST=state, C=country" -keypass changeit -storepass changeit

3. Export the certificate from your PKCS12 keystore.

keytool -exportcert -alias globus -file globus.cer -keystore globus.p12 -storetype pkcs12 -storepass changeit

4. Import the exported certificate into your Java keystore.

keytool -importcert -keystore server.jks -alias globus -file globus.cer -v -trustcacerts -noprompt -storepass changeit

5. Import the 3rd Party CA into your Java Keystore as a Trusted CA.

keytool -importcert -keystore server.jks -alias globusCA -file c:\etc\grid-security\certificates\31f15ec4.0 -v -trustcacerts -noprompt -storepass changeit

6. Import the host certificate issued by the 3rd Party CA into your Java Keystore.

keytool -importcert -keystore server.jks -alias containercert -file c:\etc\grid-security\importcontainercert.pem -v -trustcacerts -noprompt -storepass changeit
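After running either set of keytool commands, it is worth confirming that the CA actually ended up in server.jks as a trusted certificate entry, since that is what the handshake depends on. `keytool -list -v -keystore server.jks` shows this; the Java sketch below does the same check with `java.security.KeyStore`. The class name `TrustedCaCheck` is hypothetical, and the default path, password and `globusCA` alias simply mirror the commands above.

```java
import java.io.FileInputStream;
import java.io.InputStream;
import java.security.KeyStore;
import java.util.ArrayList;
import java.util.Collections;
import java.util.List;

// Hypothetical check: is the CA present in server.jks as a trusted certificate entry?
class TrustedCaCheck {

    /** Aliases stored as trusted-certificate entries (what importing a bare certificate creates). */
    static List<String> trustedCertAliases(KeyStore ks) throws Exception {
        List<String> aliases = new ArrayList<String>();
        for (String alias : Collections.list(ks.aliases())) {
            if (ks.isCertificateEntry(alias)) aliases.add(alias);
        }
        Collections.sort(aliases);
        return aliases;
    }

    /** JKS aliases are case-insensitive, so "globusCA" matches the stored "globusca". */
    static boolean isTrustedCa(KeyStore ks, String alias) throws Exception {
        return ks.isCertificateEntry(alias); // false if the alias is missing or is a key entry
    }

    public static void main(String[] args) throws Exception {
        String file = args.length > 0 ? args[0] : "server.jks";
        String pass = args.length > 1 ? args[1] : "changeit";
        String caAlias = args.length > 2 ? args[2] : "globusCA";
        KeyStore ks = KeyStore.getInstance("JKS");
        InputStream in = new FileInputStream(file);
        try {
            ks.load(in, pass.toCharArray());
        } finally {
            in.close();
        }
        System.out.println("Trusted certificate entries: " + trustedCertAliases(ks));
        System.out.println(caAlias + (isTrustedCa(ks, caAlias) ? " is" : " is NOT") + " a trusted CA entry");
    }
}
```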

Monday, August 17, 2009

H1N1 Response

I just realized that I haven't made any posts about our H1N1 response. The grid team has been working with the rest of NCPHI to prepare for the flu season and the H1N1 surveillance it will require. The H1N1 response has been consuming a lot of everyone's time.

I've been working on a data format, modeled after Appendix A of the DiSTRIBuTE data use agreement, that serves as a form of "GIPSE Lite": it defines a CSV format that can be used for immediate H1N1 surveillance. The data format is available on the wiki.

This format can be used in the "Producer-Collector" style of GIPSE architecture, where data sources provide daily data feeds by producing a report for transfer to a collector node (such as DiSTRIBuTE or CDC's GIPSE store repository). This is proving to be a viable option for sites that do not wish to host a query service, which requires a dedicated web service host with inbound access on port 443.

This DUA is also helpful because it is the first one from CDC that I'm aware of that specifically addresses the collection and transfer of summary and aggregate data.
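To illustrate the producer side of the "Producer-Collector" pattern, here is a minimal Java sketch that turns one day's aggregate counts into a CSV report ready for transfer to a collector. The column names (`date,region,indicator,count`) and the class name `DailyReportWriter` are hypothetical placeholders, not the GIPSE Lite columns; real feeds should follow the format on the wiki.

```java
import java.io.IOException;
import java.io.StringWriter;
import java.io.Writer;
import java.util.Map;
import java.util.SortedMap;

// Hypothetical producer: writes one day's aggregate counts as CSV.
// PLACEHOLDER columns only; the real GIPSE Lite layout is defined on the wiki.
class DailyReportWriter {

    static final String HEADER = "date,region,indicator,count";

    /** Quotes a field containing a comma, quote or line break, doubling embedded quotes (as RFC 4180 describes). */
    static String csvField(String s) {
        if (s.indexOf(',') >= 0 || s.indexOf('"') >= 0 || s.indexOf('\n') >= 0 || s.indexOf('\r') >= 0) {
            return "\"" + s.replace("\"", "\"\"") + "\"";
        }
        return s;
    }

    /** countsByRegion: region -> (indicator -> count); sorted maps give a stable row order. */
    static void write(Writer out, String date,
                      SortedMap<String, SortedMap<String, Long>> countsByRegion) throws IOException {
        out.write(HEADER);
        out.write('\n');
        for (Map.Entry<String, SortedMap<String, Long>> region : countsByRegion.entrySet()) {
            for (Map.Entry<String, Long> ind : region.getValue().entrySet()) {
                out.write(csvField(date) + "," + csvField(region.getKey()) + ","
                        + csvField(ind.getKey()) + "," + ind.getValue());
                out.write('\n');
            }
        }
        out.flush();
    }

    /** Convenience wrapper that returns the report as a String. */
    static String toCsv(String date, SortedMap<String, SortedMap<String, Long>> countsByRegion) {
        StringWriter sw = new StringWriter();
        try {
            write(sw, date, countsByRegion);
        } catch (IOException e) {
            throw new IllegalStateException(e);
        }
        return sw.toString();
    }
}
```

Only aggregate counts leave the producer, which fits the summary-data focus of the DUA; how the file is then transferred to the collector is outside this sketch.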

Sunday, August 16, 2009

Prepping for Monday.

Greetings!

Over the past couple of weeks I have been working on two fronts. On one front, GridViewer is being modified heavily with lots of cool new JavaScript and AJAX-style functionality, so I have been building simple data-fetching interfaces as needed to support it. On the other front, I found that GIPSEPoison works everywhere except the training node, and I have been investigating why.